Your brand is likely invisible inside AI conversations. While your traditional SEO dashboards show strong traffic, you have a massive blind spot: you don't know whether ChatGPT, Gemini, or Claude is recommending your products. When a potential customer asks an AI for a solution, your competitors may get all the credit, and you won't even know it. This isn't just a monitoring problem; it’s a revenue problem.

Most marketing teams are flying blind with tools built for a pre-AI internet.

Standard observability platforms track application performance, not brand citations in Large Language Models (LLMs). SEO software is still fixated on keyword rankings in search engines. This leaves a critical intelligence gap. Without the right data, you can't know why AI models ignore you or what specific actions will earn a coveted mention.

This guide breaks down the best AI visibility service providers to solve this problem. We move beyond vanity metrics to focus on actionable intelligence. Each platform analysis includes screenshots, links, and a clear assessment of its strengths. You will find practical guidance to select the right tool to expose content gaps, analyze competitor mentions, and build a roadmap for getting your brand cited by AI.

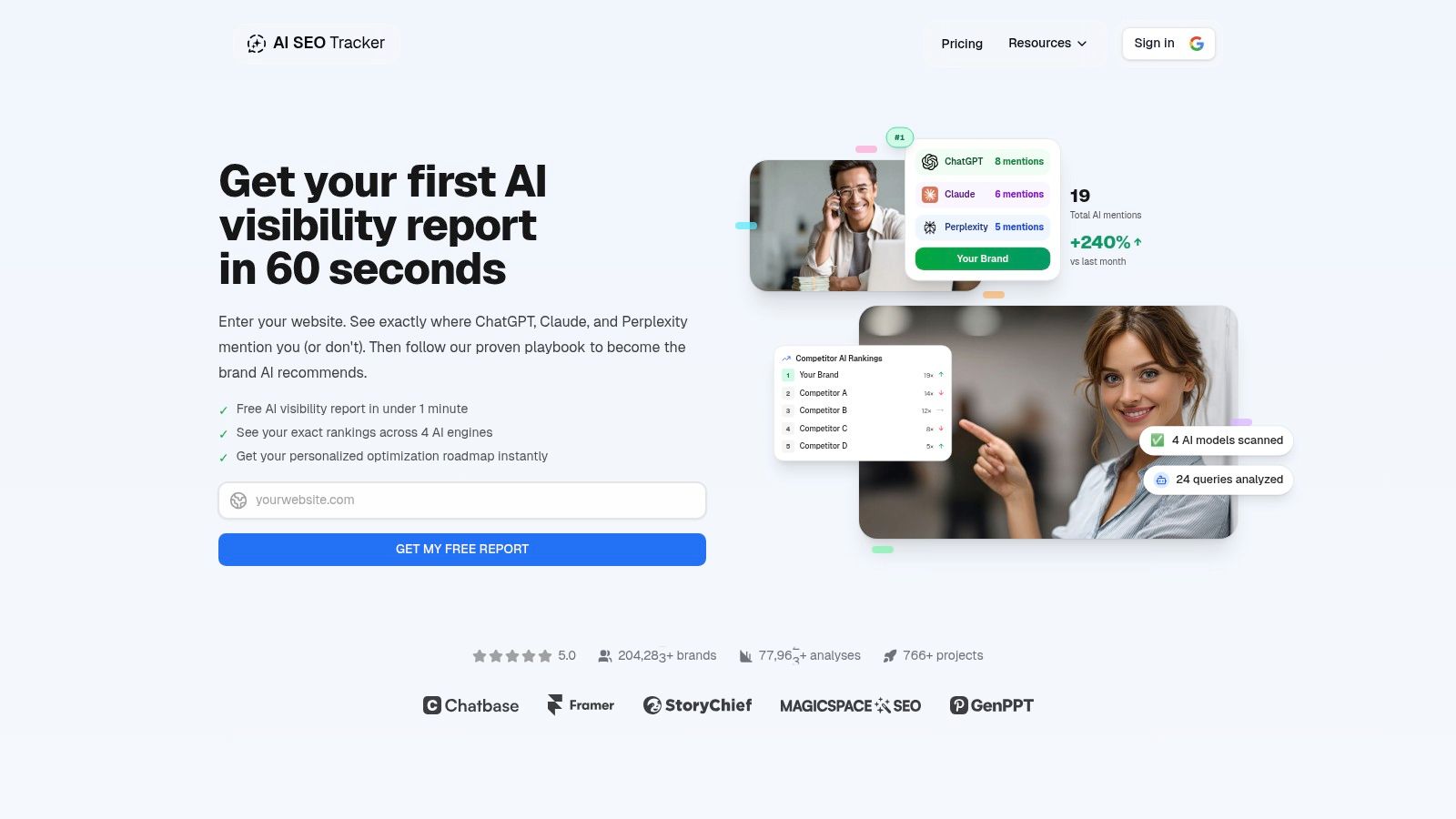

1. AI SEO Tracker

AI SEO Tracker is built for brands that need to master their presence within Large Language Model (LLM) responses. Unlike traditional SEO tools, this platform provides direct insight into how your brand is represented across major AI engines like ChatGPT, Gemini, Claude, and Perplexity. It’s a purpose-built tool for the next era of search, making it one of the best AI visibility service providers for marketing teams.

The platform’s core strength is translating AI visibility data into a concrete strategy. It moves beyond simple rank tracking to reveal the specific prompts real customers are using, where you rank in AI-generated answers, and what your competitors are doing right. This focus on outcome-driven metrics is crucial for proving ROI.

Key Features and Use Cases

AI SEO Tracker excels with its practical, hands-on tools. The Page Inspector feature is a significant differentiator. It scans any URL from the perspective of an LLM, exposing content issues that humans might miss, such as invisible text or poorly structured data that models can't parse.

Mini Case Study: A B2B SaaS company used the platform and found its pricing page was never cited because pricing was hidden behind a "request a demo" form. The Page Inspector flagged this as a crawlability issue for LLMs. They followed the recommendation to add a static pricing table, and within weeks, their product was being included in AI answers for "How much does [Product] cost?" prompts, directly influencing buyer consideration.
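
The kind of check described in this case study can be approximated with a short script. The sketch below is not AI SEO Tracker's Page Inspector; it is a minimal illustration, using only the Python standard library, of testing whether pricing appears in a page's static HTML. The class name `VisibleTextParser`, the function `static_prices`, and the price pattern are all assumptions made for this example, and it assumes the crawler does not execute JavaScript or submit forms.

```python
import re
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text that is present in static HTML, ignoring script/style content."""

    SKIP_TAGS = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text outside of skipped elements.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


# Hypothetical pattern: a currency symbol followed by a number.
PRICE_PATTERN = re.compile(r"[$€£]\s?\d+(?:[.,]\d+)?")


def static_prices(html: str) -> list[str]:
    """Return price-like strings found in the static, visible text of a page."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return PRICE_PATTERN.findall(" ".join(parser.chunks))


gated_page = (
    "<html><body><h1>Pricing</h1>"
    "<form><button>Request a demo</button></form>"
    '<script>var plan = "$49";</script></body></html>'
)
static_page = (
    "<table><tr><td>Starter</td><td>$49 / month</td></tr>"
    "<tr><td>Team</td><td>$199 / month</td></tr></table>"
)

print(static_prices(gated_page))
print(static_prices(static_page))
```

An empty result for a pricing URL is a hint, not proof, that a model reading the raw HTML has nothing to quote when asked what the product costs.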

The platform provides a prioritized optimization roadmap with prescriptive fixes, such as creating new comparison pages or filling specific content gaps. These insights are complemented by dashboards that track your AI share-of-voice and competitive positioning. You can explore additional details about the best AI visibility products to round out your approach.
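
To make "AI share-of-voice" concrete, here is a rough sketch of one way such a metric could be computed from a set of collected AI answers. The definition used here (each brand's fraction of all brand mentions, counting at most one mention per answer) is an assumption for illustration and may differ from how any vendor calculates it. The function name `share_of_voice` and the brand names are hypothetical.

```python
import re
from collections import Counter


def share_of_voice(answers, brands):
    """Return each brand's fraction of total brand mentions across the answers.

    An answer counts at most once per brand, and matching is
    case-insensitive on whole words.
    """
    counts = Counter()
    for answer in answers:
        for brand in brands:
            pattern = rf"\b{re.escape(brand)}\b"
            if re.search(pattern, answer, flags=re.IGNORECASE):
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


# Hypothetical answers collected for the same prompt across several engines.
sample_answers = [
    "Acme and Globex are popular choices.",
    "Many teams pick Globex for sprint planning.",
    "You could try Initech or Globex.",
    "There is no clear winner in this category.",
]
sample_brands = ["Acme", "Globex", "Initech"]

print(share_of_voice(sample_answers, sample_brands))
```

Simple string matching like this misses paraphrases and misspellings, so treat the output as a baseline rather than a precise measurement.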

Practical Assessment

Pros:

- Multi-LLM Tracking: Monitors brand mentions and positioning across four key AI engines (ChatGPT, Gemini, Claude, Perplexity).

- Actionable Page-Level Fixes: The Page Inspector provides specific, technical recommendations to make your content AI-friendly.

- Rapid Insights: The free visibility report offers a comprehensive audit and optimization plan in about 60 seconds.

- Competitive Intelligence: Dashboards reveal AI share-of-voice, highlight competitor gaps, and send real-time alerts.

Cons:

- Pricing Transparency: Detailed pricing is not publicly listed on the homepage, requiring contact with sales for enterprise plans.

- Effort-Dependent Results: The platform provides the roadmap, but achieving significant gains (like the company-reported +240% in mentions) requires consistent implementation of content and technical fixes.

Website: https://aiseotracker.com

2. Datadog

Datadog extends its established observability platform into the AI space. Rather than offering a standalone AI tool, Datadog integrates LLM Observability directly into its existing APM and infrastructure monitoring products. This approach is ideal for engineering and DevOps teams who need a unified view of their entire stack, from the GPU to the end-user application.

The platform excels at connecting AI performance with underlying system health. For instance, you can trace a high-latency response from an LLM-powered chatbot back to a specific service, view the corresponding logs, and check the infrastructure metrics for that host, all within a single interface. This holistic context is a significant advantage for complex troubleshooting. To gain a broader perspective on how AI is shaping the marketing landscape, delve into a detailed analysis of the future of B2B marketing with AI.

Key Features & Considerations

| Feature | Details |
|---|---|
| Unified Observability | Combines LLM usage, cost, and latency tracking with APM, logs, metrics, and RUM. |
| Full-Stack Tracing | Distributed tracing links AI model performance directly to application and infrastructure health. |
| Integrations | Extensive ecosystem of integrations with cloud providers, frameworks, and MLOps tools. |
| Pricing Model | Can become complex and costly at scale, requiring careful management of data ingestion. |

Pros:

- Reduces Tool Sprawl: A single vendor for infrastructure, application, and AI visibility simplifies operations.

- Scalability: Proven to handle massive estates with robust, enterprise-grade features.

Cons:

- Cost & Complexity: Pricing can be a significant investment and is notoriously difficult to forecast.

- Vendor-Level Volatility: Some market analysts, like those cited by Seeking Alpha in 2023, have noted volatility in the company's growth trajectory.

Website: https://www.datadoghq.com

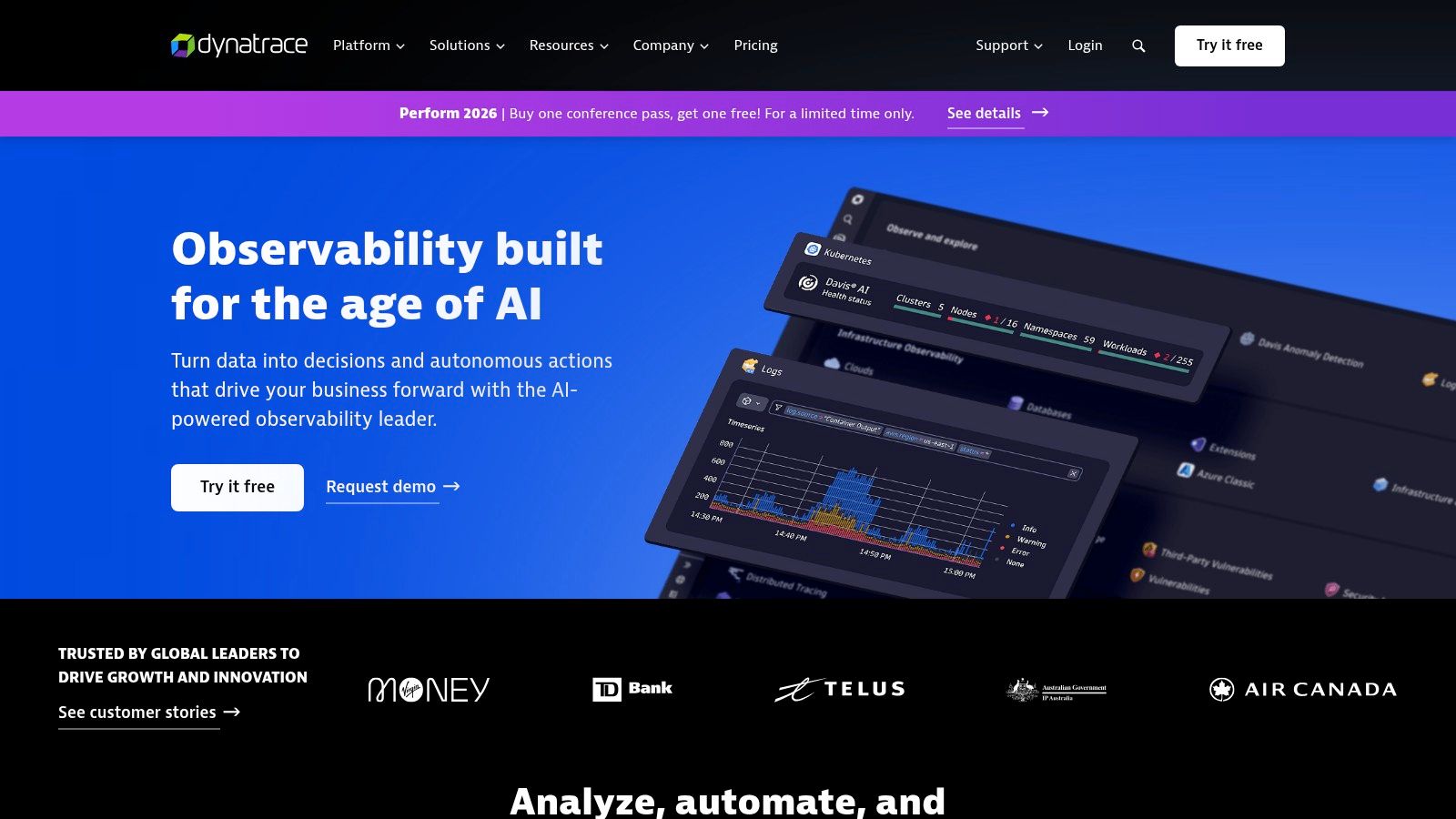

3. Dynatrace

Dynatrace brings its enterprise-grade observability and security platform to the AI/LLM space, positioning itself as a premium choice for complex, regulated deployments. Its core strength lies in its Grail data lakehouse and Davis AI, which provide automated, causality-driven analysis. This makes Dynatrace ideal for organizations that need precise root-cause analysis, particularly in hybrid or on-premise AI environments.

The platform offers end-to-end visibility across the entire AI stack, from LLMs to the underlying NVIDIA GPU infrastructure. Instead of just presenting data, Davis AI automatically detects anomalies and pinpoints the exact cause, saving teams from manual correlation. For enterprises managing significant AI spend, its cost forecasting features for LLM usage provide critical budget oversight.

Key Features & Considerations

| Feature | Details |
|---|---|
| Automated Root-Cause Analysis | Davis AI automatically identifies anomalies and their precise root causes across the AI stack. |
| End-to-End AI Stack Visibility | Comprehensive monitoring for LLMs, GPUs, vector databases, and orchestration frameworks. |
| Cost Forecasting | Provides insights and forecasting for LLM usage to help manage operational costs. |
| Enterprise & Hybrid Focus | Strong support for complex hybrid and on-premise AI deployments. |

Pros:

- Automated, Precise Insights: Davis AI provides exact root causes with topology context, eliminating guesswork.

- Strong for Hybrid/On-Prem: Well-suited for large enterprises with complex, non-cloud-native AI infrastructure.

Cons:

- Premium Pricing & Complexity: Can be an expensive and complex solution, potentially overkill for smaller teams.

- Consumption-Based Model: Enterprise licensing tied to data volume can make costs hard to predict.

Website: https://www.dynatrace.com

4. New Relic

New Relic positions itself as a comprehensive observability platform that extends its powerful APM into the AI domain. It's a strong choice for organizations that want a single, unified interface for all their telemetry data. The platform's dedicated AI Monitoring (AIM) is designed to provide full visibility across the AI stack, including emerging support for complex agentic systems.

The key advantage of New Relic lies in its unified data platform, which allows teams to correlate AI model performance directly with user experience and infrastructure health. For engineering teams already using New Relic, adding AI visibility feels like a natural extension. With a clear commitment to evolving its agentic AI monitoring, it appeals to those building next-generation multi-step AI agents.

Key Features & Considerations

| Feature | Details |
|---|---|
| Unified AI Monitoring | Integrates AI model and agent monitoring with APM, infrastructure, logs, and RUM in one UI. |
| Agentic/MCP Support | Provides emerging support for agentic systems through integrations like DeepSeek and MCP server. |
| Evolving AI Roadmap | Company shows a clear focus on ongoing AI-focused enhancements and community resources. |
| Plan-Dependent Features | Access to the most advanced AI monitoring may require enterprise-level plans. |

Pros:

- Broad Ecosystem: A well-established player with consistent industry recognition and extensive integrations.

- Future-Focused: Clear roadmap for advanced monitoring of agentic AI systems.

Cons:

- Gated Features: Top-tier AI features might be locked behind enterprise plans, requiring direct sales contact.

- Tiered Capability: The depth of AI monitoring insights can vary significantly depending on your subscription plan.

Website: https://newrelic.com

5. Splunk Observability (Cisco)

Splunk Observability, now part of Cisco, extends its powerful enterprise telemetry capabilities into the AI domain. It is particularly well-suited for large organizations already using Splunk or Cisco technologies. The platform's approach centers on unifying network, infrastructure, application, and AI visibility. This makes it an excellent choice for teams needing to correlate AI agent performance with underlying network and system behavior.

The platform introduces AI Agent Monitoring, designed to track the quality, security, and cost of LLMs. By integrating data from AppDynamics, Observability Cloud, and ThousandEyes, Splunk offers deep, network-to-application correlation. This allows a DevOps team to trace a slow AI-driven feature not just to a container but all the way back to a specific network issue.

Key Features & Considerations

| Feature | Details |
|---|---|
| AI Agent Monitoring | Provides insights into the quality, security, and cost of generative AI agents and LLMs. |
| Unified Visibility | Integrates telemetry from AppDynamics, Observability Cloud, and ThousandEyes for a comprehensive view. |
| Network-to-App Correlation | Links application performance directly to network health and infrastructure. |
| Licensing Complexity | Licensing and modular SKUs through Splunk/Cisco sales channels can add complexity. |

Pros:

- Deep Enterprise Telemetry: Exceptional depth of data collection, especially with existing Cisco integrations.

- Mature Ecosystem: Strong event correlation and proven troubleshooting tools for enterprise-scale issues.

Cons:

- Roadmap Dependent: Some advanced AI features are still in private preview, making them dependent on the future roadmap.

- Complex Procurement: Licensing and navigating the various product SKUs can be a significant undertaking.

Website: https://www.splunk.com

6. Langfuse

Langfuse offers a powerful open-source foundation for LLM observability, making it a favorite among developers and startups who prioritize transparency and control. It provides a suite for tracing, evaluation, prompt management, and cost tracking, available as both a self-hosted open-source solution and a managed cloud service. This flexibility allows teams to start quickly and evolve their setup as needed.

The platform is designed with developer-friendly ergonomics. Langfuse excels at granular cost and token tracking, allowing teams to pinpoint expensive operations and optimize their LLM usage effectively. Its integrated "LLM-as-a-judge" evaluation framework and dataset management provide a complete toolkit for not just monitoring but actively improving model performance, making it one of the best AI visibility service providers for hands-on teams.
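
As an illustration of what per-operation cost and token tracking involves, the sketch below aggregates token counts into a cost per operation. It does not use the Langfuse SDK or reflect its data model; the `LLMCall` record, the `cost_by_operation` function, and the per-token prices are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class LLMCall:
    operation: str       # logical step in the app, e.g. "summarize"
    input_tokens: int
    output_tokens: int


# Hypothetical prices in USD per one million tokens; real prices vary by model.
PRICE_PER_MILLION = {"input": 1.00, "output": 4.00}


def cost_by_operation(calls):
    """Sum the estimated cost of each operation, most expensive first."""
    totals = {}
    for call in calls:
        cost = (
            call.input_tokens * PRICE_PER_MILLION["input"]
            + call.output_tokens * PRICE_PER_MILLION["output"]
        ) / 1_000_000
        totals[call.operation] = totals.get(call.operation, 0.0) + cost
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


sample_calls = [
    LLMCall("summarize", input_tokens=200_000, output_tokens=50_000),
    LLMCall("classify", input_tokens=100_000, output_tokens=0),
    LLMCall("summarize", input_tokens=300_000, output_tokens=50_000),
]

for operation, cost in cost_by_operation(sample_calls).items():
    print(f"{operation}: ${cost:.2f}")
```

Sorting by cost surfaces the operations worth optimizing first, which is the practical point of granular tracking.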

Key Features & Considerations

| Feature | Details |
|---|---|
| Open-Source Core | Provides a free, self-hostable OSS version for maximum control and data privacy. |
| Comprehensive Tooling | Integrates tracing, prompt management, cost tracking, and LLM-based evaluations. |
| Flexible Deployment | Offers both a managed cloud service and the open-source option for customization. |
| Pricing Model | Uses usage-based units for its cloud plans, which may require a learning curve to forecast costs. |

Pros:

- Fast Adoption: A generous free tier, OSS availability, and clear documentation make it easy to get started.

- Active Development: The project sees frequent releases and has even announced public price reductions, signaling a focus on user value.

Cons:

- Infrastructure Overhead: Self-hosting the open-source version requires managing your own infrastructure.

- Unit-Based Pricing: The cloud platform's pricing model, while transparent, can be unfamiliar compared to per-seat models.

Website: https://langfuse.com

7. LangSmith (by LangChain)

LangSmith, from the creators of the LangChain framework, is a specialized observability platform for LLM applications and agents. It provides tools for tracing, monitoring, and evaluating model performance, regardless of whether your application is built with LangChain. This makes it a compelling choice for development teams building complex, agent-based systems who need granular insights.

The platform's deep integration with agentic workflows is a key differentiator. It allows you to monitor not just individual LLM calls but the entire multi-step reasoning process of an agent. LangSmith also addresses critical enterprise needs with flexible hosting options, including cloud, hybrid, and fully self-hosted deployments, providing control over data privacy.

Key Features & Considerations

| Feature | Details |
|---|---|
| Agent-Centric Tooling | Tracing, monitoring, and evaluation tools built specifically for LLM agents and chains. |
| Flexible Hosting | Offers cloud, hybrid, and self-hosted deployment options to meet security needs. |
| Comprehensive Evals | Supports online and offline evaluations with datasets and annotation queues to test prompts. |
| Deployment Capabilities | Includes agent deployment functionality with uptime metrics and cost tracking. |

Pros:

- Deep Framework Integration: Unparalleled visibility for applications built with LangChain and LangGraph.

- Enterprise-Ready: Self-hosting options and region controls (US/EU) appeal to organizations with strict data governance.

Cons:

- Cost Scaling: Usage-based costs for traces can increase significantly with high-volume applications.

- Retention Planning: Trace retention limits on standard plans may require purchasing extended retention, adding to the cost.

Website: https://www.langchain.com/langsmith

8. Arize (Phoenix / Arize AX)

Arize takes a developer-first approach, offering an open-source on-ramp (Phoenix) that scales into a managed enterprise solution (Arize AX). This allows teams to start with local tracing and evaluation tools and then transition to a production-grade platform. It is designed to handle the complex, high-volume data generated by LLM applications.

The platform stands out by bridging the gap between local development and production monitoring. Teams can trace and visualize agent graphs on their local machines with Phoenix. This same data structure then feeds into Arize AX for robust monitoring at scale. This makes Arize one of the more flexible options among the best AI visibility service providers. If you're looking for more detail, you can find a comprehensive review of the most accurate AI visibility metrics software.

Key Features & Considerations

| Feature | Details |
|---|---|
| OSS-First Approach | Start with the open-source Phoenix library for local tracing before committing to a managed service. |
| Agent Tracing | Provides clear visualizations of agent decision paths, tool usage, and sub-task performance. |
| Transparent Pricing | Pro tier features published overage rates for spans and data ingestion, simplifying cost forecasting. |
| Unified Workflow | Supports the full AI lifecycle, from offline evaluation datasets to online production monitors. |

Pros:

- OSS On-Ramp: Allows teams to adopt tools for free in development, easing the transition to a paid production platform.

- Competitive & Transparent Pricing: Usage-based model with clear overage rates on its Pro tier is attractive for startups.

Cons:

- Enterprise Features: Accessing advanced features like longer data retention or SSO often requires engaging with the sales team.

- Potential Cost at Scale: High-volume usage and extended retention needs can lead to increased costs.

Website: https://arize.com

9. Fiddler

Fiddler carves out a niche in AI observability by placing a strong emphasis on model governance, risk, and compliance. It's designed for teams in highly regulated industries or those with risk-sensitive applications who need more than just performance metrics. The platform provides robust monitoring for performance and drift while layering on features for explainability, fairness analytics, and real-time LLM guardrails.

Its standout capability lies in production-level enforcement, actively blocking issues like hallucinations, toxicity, PII leaks, and prompt injection attacks. This makes Fiddler one of the best AI visibility service providers for organizations that require a unified solution for both classical machine learning models and modern LLMs, ensuring consistent governance.
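
To show the general shape of an output guardrail, here is a deliberately simple sketch that blocks responses containing PII-like strings. It is not Fiddler's implementation or API. Production guardrails typically rely on trained classifiers rather than regular expressions, and the two patterns and the `check_output` function below are assumptions for illustration only.

```python
import re

# Hypothetical, intentionally narrow patterns for two kinds of PII.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def check_output(text: str) -> dict:
    """Decide whether a model response may be returned to the user."""
    findings = []
    if EMAIL_PATTERN.search(text):
        findings.append("email")
    if US_SSN_PATTERN.search(text):
        findings.append("us_ssn")
    return {"allowed": not findings, "findings": findings}


print(check_output("You can reach Jane at jane.doe@example.com."))
print(check_output("The Team plan includes unlimited projects."))
```

The important design point is that the check runs in the request path and returns a decision, instead of only logging the issue for later review.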

Key Features & Considerations

| Feature | Details |
|---|---|
| Real-Time LLM Guardrails | Actively monitors and enforces policies against hallucinations, toxicity, and prompt injection. |
| Bias & Fairness Analytics | Offers dedicated tools to detect and mitigate bias in model outputs. |
| Unified Monitoring | Provides a single pane of glass for both traditional ML models and large language models. |
| Deployment Flexibility | Available as a SaaS solution or can be deployed on-premises for maximum data control. |

Pros:

- Strong Responsible-AI Focus: Excellent for teams prioritizing governance, fairness, and compliance.

- Unified Platform: Manages both classical ML and LLM monitoring, reducing tool fragmentation.

Cons:

- Complex Pricing: Precise costs require direct scoping based on data volume and the number of models.

- Add-On Features: Some advanced capabilities are offered as add-ons, which can increase the total price.

Website: https://www.fiddler.ai

10. Sentry

Sentry extends its developer-centric application monitoring to the AI stack, making it a natural fit for teams who already rely on it for error tracking. Its approach is uniquely code-first, directly connecting AI pipeline failures to the specific code commit and developer responsible. This makes it an excellent choice for engineering teams focused on debugging and iterating quickly.

The platform provides end-to-end tracing for agent runs, capturing everything from the initial prompt to tool spans and final outputs. Its optional Seer AI debugger even suggests code fixes for identified issues. This tight integration into existing Sentry workflows lowers the adoption barrier, making it one of the more accessible AI visibility service providers for development teams.

Key Features & Considerations

| Feature | Details |
|---|---|
| Code-Centric Tracing | Links AI agent and LLM issues directly to code commits, pull requests, and owning teams. |
| Full Pipeline View | End-to-end tracing captures prompts, tool spans, outputs, and tracks token usage and costs. |
| Developer Workflow | Integrates AI monitoring into the familiar Sentry interface for error tracking. |
| AI Debugger (Seer) | An optional add-on that uses AI to analyze issues and propose code fixes. |

Pros:

- Developer-First Experience: Ties AI failures directly to the underlying code, streamlining the debugging process.

- Familiar Interface: Teams already using Sentry can adopt AI monitoring with a minimal learning curve.

Cons:

- Beta Features: Some of the more advanced AI monitoring capabilities are still in beta and may evolve.

- Add-On Costs: The Seer AI debugger requires a separate paid subscription after the initial trial period.

Website: https://sentry.io/for/llm-monitoring/

11. Palo Alto Networks (AI Access Security)

Palo Alto Networks approaches AI visibility from a pure security and governance perspective, making it a critical tool for enterprises concerned with "shadow AI." Its AI Access Security is designed to discover, monitor, and control the use of generative AI applications across the organization. This platform is less about model performance and more about applying data protection and access policies to prevent data leakage.

The system uses a dynamic catalog of over 2,250 GenAI apps to provide comprehensive visibility into what services employees are using. For security and IT teams, this means they can move from a reactive to a proactive stance, setting up GenAI policy templates and monitoring threats. Its strength lies in integrating these controls directly into the existing network security fabric.

Key Features & Considerations

| Feature | Details |
|---|---|
| GenAI App Discovery | Provides visibility into thousands of GenAI apps using a dynamic catalog. |
| Policy & DLP | Offers default GenAI policy templates and integrates with Data Loss Prevention (DLP). |
| Last-Mile Browser Controls | Prisma Access Browser can enforce granular controls like disabling copy/paste for specific AI apps. |
| Platform Integration | Natively integrates with the broader Palo Alto Networks security ecosystem. |

Pros:

- Strong Security Posture: Integrates powerful policy, DLP, and threat analytics with established network security.

- Comprehensive Control: Manages both sanctioned SaaS use and browser-level access for robust control over data.

Cons:

- Platform Dependency: Maximum value is realized within the broader Palo Alto ecosystem.

- Complex Procurement: Licensed features typically require purchasing through partners and a more involved integration process.

Website: https://docs.paloaltonetworks.com/ai-access-security

12. Netskope

Netskope shifts the focus of AI visibility from application performance to security and governance, making it a critical tool for risk and compliance teams. The Netskope One platform is designed to combat "shadow AI" by discovering and controlling the use of both managed and unmanaged AI applications. This approach is valuable for organizations already invested in a SASE or CASB security framework.

The platform provides a dedicated AI Dashboard that offers clear insights into which AI apps are being used, what actions users are taking, and associated risk levels. A key differentiator is its app risk classification, which details whether an AI vendor's policy includes training their models on your corporate data. This security-first perspective makes Netskope one of the best AI visibility service providers for enterprises looking to enforce governance.

Key Features & Considerations

| Feature | Details |
|---|---|
| AI Dashboard | Provides a centralized view of AI apps, user activity, and potential risks. |
| App Risk Classification | Rates AI services based on risk profiles, including their data usage and model training policies. |
| Data Loss Prevention (DLP) | Extends existing DLP policies to monitor and block sensitive data from being sent to AI apps. |
| Shadow AI Discovery | Identifies and reports on unsanctioned AI application usage across devices. |

Pros:

- Strong Governance Posture: Excellent for enforcing data protection policies and providing executive-level reporting on AI risk.

- Integrates with SASE/CASB: Complements existing security programs to effectively manage shadow AI threats.

Cons:

- Licensing Dependencies: Full features are tied to specific Netskope platform licensing tiers.

- Implementation Effort: Realizing the full value requires a dedicated rollout and policy configuration effort.

Website: https://www.netskope.com/solutions/securing-ai

Top 12 AI Visibility Providers — Comparison

| Product | Core features | Unique features (✨) | Target audience (👥) | Pricing / Value (💰) | Quality (★) |
|---|---|---|---|---|---|
| AI SEO Tracker 🏆 | AI citation visibility across ChatGPT, Gemini, Claude, Perplexity; Page Inspector; dashboards; revenue calc | ✨ AI-specific rankings, Page Inspector + prescriptive playbooks | 👥 SaaS marketing teams, SEO managers, growth founders, agencies | 💰 Free 60s visibility report; ROI calculator; contact sales for plans | ★★★★★ Outcome-focused; reported +240% AI mentions |
| Datadog | LLM observability + APM, logs, metrics, RUM; LLM cost/latency tracking | ✨ Unified infra+AI telemetry with broad integrations | 👥 Large infra/app teams and enterprises | 💰 Enterprise pricing; can be costly at scale | ★★★★ Analyst-validated, scalable |
| Dynatrace | End-to-end AI stack visibility (LLMs, GPUs, vector DBs); automated RCA via Davis AI | ✨ Topology-aware automated causation and remediation | 👥 Large enterprises; regulated & hybrid/on‑prem deployments | 💰 Premium enterprise licensing and consumption pricing | ★★★★ Strong enterprise automation |
| New Relic | Unified APM, infra, logs, RUM + AI monitoring (agents/MCP) | ✨ Agentic AI monitoring roadmap (DeepSeek, MCP) | 👥 DevOps, SREs, infra teams wanting one UI | 💰 Tiered plans; advanced AI features often on enterprise tiers | ★★★★ Broad ecosystem recognition |
| Splunk Observability (Cisco) | Enterprise telemetry, AI Agent Monitoring, network→app correlation | ✨ Cisco/Splunk integrations and large-scale event correlation | 👥 Enterprises using Splunk/Cisco stacks | 💰 Modular SKUs; licensing complexity via sales | ★★★★ Mature enterprise tooling |
| Langfuse | Tracing, evals, datasets, prompt mgmt; OSS + managed cloud | ✨ Open-source core with clear tiered pricing | 👥 LLM engineers, startups, researchers | 💰 Transparent tiers; generous OSS/free options | ★★★ Fast to adopt; developer-friendly |
| LangSmith (by LangChain) | Tracing, monitoring, evals, agent deployments; OTel support | ✨ Tight agent-framework integration; flexible hosting (cloud/hybrid/self-hosted) | 👥 Teams using LangChain/agent frameworks | 💰 Per-seat + usage; startup plan available | ★★★★ Good for agent-based apps |
| Arize (Phoenix / Arize AX) | Tracing/evals (OSS Phoenix) + managed Arize AX; token/cost tracking | ✨ OSS on-ramp + production-ready managed tiers | 👥 ML/LLM dev→prod teams | 💰 Usage-based pricing with published overage rates | ★★★★ OSS-first with transparent pricing |
| Fiddler | LLM guardrails, drift, explainability, fairness analytics | ✨ Real-time guardrails (hallucination, PII, toxicity) & governance | 👥 Risk-sensitive, regulated teams (governance) | 💰 Enterprise scoping; add-ons may increase cost | ★★★★ Strong responsible-AI focus |
| Sentry | End-to-end agent/LLM tracing, token/cost tracking, AI debugger (Seer) | ✨ Developer-first AI debugger tied to commits/code | 👥 Product & engineering teams | 💰 Developer plans; Seer is a paid extra after trial | ★★★★ Dev-centric, quick SDK adoption |
| Palo Alto Networks (AI Access Security) | GenAI app discovery/catalog, DLP, policy controls (Prisma/NGFW) | ✨ Catalog of 2,250+ GenAI apps; last‑mile browser controls | 👥 Security teams and large enterprises | 💰 License-dependent; best value within Palo Alto platform | ★★★★ Strong policy & DLP controls |
| Netskope | AI dashboard, app risk classification, DLP, vendor training visibility | ✨ App risk profiles + vendor training insights for shadow AI | 👥 Enterprises standardizing on SASE/CASB | 💰 Platform licensing; rollout effort required | ★★★★ Robust governance & reporting |

From Visibility to Action: Your Next Move

The AI visibility market is not one-size-fits-all. The "best" provider depends entirely on what you're trying to see.

An engineering team debugging a Retrieval-Augmented Generation (RAG) pipeline has different needs than a marketing team trying to get their brand featured in AI Overviews. The tools are specialized because the problems are specialized.

Choosing Your Lens: Engineering vs. Marketing

For technical teams, platforms like Datadog and New Relic provide a familiar observability framework. They excel at monitoring infrastructure health and performance. LLM-native solutions like Langfuse and LangSmith offer granular, trace-level insights invaluable for developers building AI applications. Security teams will find robust governance in tools like Palo Alto Networks and Netskope.

But for marketers, SEO managers, and growth leaders, the critical problem is capturing demand as it shifts from search engine results pages to direct AI answers. This requires a different kind of visibility, one focused on content, citations, and brand mentions within generative AI.

Don't buy a complex observability suite if your goal is to increase organic traffic from AI chat.

Realistic Example: A B2B SaaS company that provides project management software uses Sentry to catch errors in their new AI-powered task-sorting feature. This is a classic engineering use case. However, their marketing team’s goal is to ensure that when a user asks an AI chatbot, "what is the best software for managing agile sprints," their product is recommended. For this, Sentry is irrelevant. The marketing team needs to know why their content isn't being cited.

How to Select the Right Provider for Your Team

Before you sign a contract, follow this evaluation process to avoid buying a powerful tool for the wrong problem.

- Define Your Core Problem: Are you fixing bugs in an LLM-powered application (engineering), or getting cited by LLMs (marketing)? Be specific.

- Identify Key Stakeholders: Who is the primary user? A DevOps engineer needs dashboards with latency metrics. A content strategist needs reports on brand sentiment in AI responses.

- Run a Pilot or Trial: Never commit based on a demo. Use a free trial to test the tool with your actual data. For marketers, this means getting a baseline report to see your current AI visibility versus your top three competitors.

- Evaluate Actionability: The most important question is: does this tool tell me what to do next? A great AI visibility tool doesn’t just show you a problem; it provides a specific, prioritized plan to fix it.

A good tool won't just report that a competitor is cited 50 times. It will identify the specific articles being used as sources, analyze their content structure, and provide a clear brief for creating content that can compete for that citation. That is the difference between data and a growth strategy.
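
The first step in that workflow, finding which specific pages earn the citations, can be sketched in a few lines. The example below assumes you already have a list of source URLs that AI answers cited for a competitor; how those URLs are collected is outside its scope. The function names `normalize` and `top_cited_sources` and the example URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def normalize(url: str) -> str:
    """Reduce a URL to host + path so tracking parameters don't split the counts."""
    parts = urlparse(url)
    return parts.netloc.lower() + parts.path.rstrip("/")


def top_cited_sources(cited_urls, n=3):
    """Return the n most frequently cited pages as (page, count) pairs."""
    return Counter(normalize(url) for url in cited_urls).most_common(n)


# Hypothetical citations gathered from AI answers that mention a competitor.
sample_citations = [
    "https://competitor.example/blog/agile-guide?utm_source=chat",
    "https://competitor.example/blog/agile-guide/",
    "https://Competitor.example/blog/agile-guide#sprints",
    "https://competitor.example/pricing",
]

for page, count in top_cited_sources(sample_citations, n=2):
    print(f"{count}x {page}")
```

A ranked list like this tells a content team which competitor pages to study before writing a brief, which is more useful than a raw citation total.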

The world of AI visibility is splitting into technical observability and marketing intelligence. Recognizing which path you’re on is the most critical first step. The goal isn't just to see what AI is doing; it’s to influence its output to drive tangible business results.

Ready to move from data to action? AI SEO Tracker is built for marketers to monitor, analyze, and increase their visibility in AI search answers. See where you stand today with a free analysis from AI SEO Tracker.